AI21 Labs has introduced a significant advancement in the field of large language models with the release of Jamba.

This model uses a novel hybrid architecture that interleaves Transformer layers with Mamba layers, delivering competitive performance while easing the memory constraints typically associated with large-scale language models. This post covers Jamba's architecture, key features, and the implications of this technology in the AI landscape.

Hybrid Architecture: Combining Transformers and Mamba Layers

At the core of Jamba's innovation is its hybrid architecture, which combines Transformer (attention) layers with Mamba layers, a type of structured state space model (SSM). Transformers, while powerful, have substantial memory requirements at inference time because every attention layer caches keys and values for all previous tokens, and that cache grows linearly with context length. Mamba layers instead carry a fixed-size state, so replacing most attention layers with Mamba layers shrinks the overall memory footprint while aiming to preserve quality.

In Jamba's architecture, attention layers are interleaved with Mamba layers in a carefully tuned ratio (e.g., 1:7, meaning one attention layer for every seven Mamba layers). This alternation keeps the modeling benefits of attention while significantly lowering memory usage. The result is a model that can handle longer contexts and larger batches more efficiently than models relying solely on Transformers.
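To make the memory argument concrete, here is a rough back-of-the-envelope sketch. It assumes the 1:7 ratio means one attention layer per group of eight layers, and the model dimensions (`N_LAYERS`, `N_KV_HEADS`, `HEAD_DIM`) are hypothetical values chosen for illustration, not Jamba's published configuration. It also ignores the small fixed-size state that Mamba layers keep.

```python
def layer_layout(n_layers: int, attn_every: int) -> list[str]:
    """Return a layer-type list with one attention layer per `attn_every` layers."""
    # The attention layer is placed mid-group; that position is an
    # illustrative choice, not something the post specifies.
    return ["attention" if i % attn_every == attn_every // 2 else "mamba"
            for i in range(n_layers)]


def kv_cache_bytes(n_attn_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1,
                   bytes_per_value: int = 2) -> int:
    """KV-cache size: keys and values (the factor 2) for every attention layer."""
    return (2 * n_attn_layers * n_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Hypothetical model dimensions, for illustration only.
N_LAYERS, N_KV_HEADS, HEAD_DIM, SEQ_LEN = 32, 8, 128, 256_000

hybrid = layer_layout(N_LAYERS, attn_every=8)   # 1 attention : 7 Mamba
n_attn = hybrid.count("attention")

full = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, SEQ_LEN)
mixed = kv_cache_bytes(n_attn, N_KV_HEADS, HEAD_DIM, SEQ_LEN)

print(f"attention layers in hybrid stack: {n_attn} of {N_LAYERS}")
print(f"KV cache, attention-only: {full / 2**30:.2f} GiB")
print(f"KV cache, 1:7 hybrid:     {mixed / 2**30:.2f} GiB")
```

Under these assumptions the cache shrinks by the same factor as the number of attention layers, which is why the savings matter most at long context lengths.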

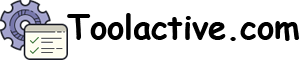

Mixture-of-Experts (MoE) Modules: Enhancing Capacity Without Increasing Costs

Another standout feature of Jamba is the integration of Mixture-of-Experts (MoE) modules. In an MoE layer, a single feed-forward block is replaced by several expert blocks, and a router activates only a few of them for each token instead of running all of them. This approach increases the model's total capacity without a proportional rise in computational cost per token.

MoE routing in Jamba ensures that only the most relevant experts are activated for a given input token, so compute is spent where the router expects it to help most. This selective activation improves efficiency and lets different experts specialize, which helps the model cover a wider range of tasks.
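The routing idea can be sketched in a few lines of plain Python. This is a generic top-k routing illustration, not AI21's implementation: the toy experts, the router scores, and the choice of `k=2` are all assumptions made for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Toy experts: each just scales its input. Real experts are MLP blocks.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 2.0]   # hypothetical router scores for one token

chosen = route_top_k(logits, k=2)
output = moe_forward(10.0, experts, logits, k=2)
print(chosen)
print(output)
```

Only two of the four experts run for this token, so the per-token compute stays close to that of a single dense block even though the layer holds four times the parameters.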

Performance and Benchmarking

Jamba has been evaluated on a range of standard benchmarks, where AI21 reports strong performance. Its results on HellaSwag, WinoGrande, and ARC are reported as competitive with leading open models of similar size, showing robustness across a diverse set of tasks. Jamba also performed well on the Massive Multitask Language Understanding (MMLU) benchmark, supporting its position as a leading language model.

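One of Jamba's most notable strengths is its throughput, particularly with large batches and long contexts, where the smaller key-value cache leaves more memory available. Its long-context retrieval was checked with the needle-in-a-haystack test, which hides a short fact inside a long document and asks the model to retrieve it; Jamba handled contexts of up to 256K tokens, and AI21 reports that it outperforms its peers in both speed and accuracy in this regime.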
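For readers unfamiliar with the needle-in-a-haystack test, the sketch below shows its general shape. It is not AI21's evaluation harness: the function names, the filler text, and the substring-based scoring rule are hypothetical choices for illustration.

```python
def build_haystack_prompt(needle: str, filler: str, n_fillers: int,
                          depth: float, question: str) -> str:
    """Insert `needle` at relative position `depth` (0.0 = start, 1.0 = end)."""
    sentences = [filler] * n_fillers
    position = round(depth * n_fillers)
    sentences.insert(position, needle)
    return " ".join(sentences) + "\n\nQuestion: " + question


def is_retrieved(model_answer: str, expected: str) -> bool:
    """Score a response: did the model surface the hidden fact?"""
    return expected.lower() in model_answer.lower()


NEEDLE = "The secret passcode is 7421."
FILLER = "The sky was clear and the market was quiet."

prompt = build_haystack_prompt(
    needle=NEEDLE,
    filler=FILLER,
    n_fillers=1000,
    depth=0.5,
    question="What is the secret passcode?",
)

# `prompt` would be sent to the model; the reply is then scored, e.g.:
print(is_retrieved("The passcode is 7421.", "7421"))
```

A full evaluation would sweep both the context length (`n_fillers`) and the insertion `depth`, then report retrieval accuracy for each combination.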

Fine-Tuning and Accessibility

Jamba’s versatility extends beyond its out-of-the-box capabilities. It can be fine-tuned for specialized domains and tasks using libraries like PEFT (Parameter-Efficient Fine-Tuning). This makes Jamba an adaptable tool for a wide range of applications, from generating English quotes to more domain-specific tasks.
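As a rough sketch of what a PEFT setup might look like, the snippet below attaches LoRA adapters to an already loaded model. The hyperparameters are hypothetical starting points, and the `target_modules` names are an assumption about how the model's projection layers are named; inspect `model.named_modules()` to confirm them before training.

```python
# Hypothetical hyperparameters; tune them for your task and hardware.
LORA_SETTINGS = {
    "r": 8,
    "lora_alpha": 16,
    "lora_dropout": 0.05,
    "bias": "none",
    "task_type": "CAUSAL_LM",
    # Assumed layer names; check model.named_modules() to confirm.
    "target_modules": ["embed_tokens", "x_proj", "in_proj", "out_proj"],
}


def wrap_with_lora(model, settings=LORA_SETTINGS):
    """Attach LoRA adapters so only the small adapter weights are trained."""
    # Imported lazily so this file can be read without peft installed.
    from peft import LoraConfig, get_peft_model

    peft_model = get_peft_model(model, LoraConfig(**settings))
    peft_model.print_trainable_parameters()
    return peft_model
```

The wrapped model can then be passed to a standard training loop or trainer; only the adapter weights receive gradient updates, which keeps fine-tuning memory well below that of full fine-tuning.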

AI21 Labs has made Jamba accessible through various cloud platforms and frameworks, including integration with Hugging Face. Developers can load the model, generate outputs, and post-process those outputs from Python using the standard Hugging Face tooling. Additionally, AI21 provides custom solutions and model tuning services, so that Jamba can be tailored to the specific needs of diverse users.
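A minimal sketch of loading the model and generating text with the Hugging Face `transformers` library might look like the following. The repository name in `MODEL_ID` is an assumption, `device_map="auto"` additionally requires the `accelerate` package, and the full model needs substantial GPU memory, so treat this as an outline rather than a tested recipe.

```python
MODEL_ID = "ai21labs/Jamba-v0.1"  # assumed Hugging Face Hub repository name


def generate(prompt: str, max_new_tokens: int = 64,
             model_id: str = MODEL_ID) -> str:
    """Load the model and tokenizer, then return the decoded completion."""
    # Imported lazily: loading the weights is expensive and hardware-dependent.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, device_map="auto", torch_dtype="auto"
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example call (downloads the weights on first use):
# print(generate("In the recent Super Bowl LVIII,"))
```

In a real application the model and tokenizer would be loaded once and reused across calls rather than reloaded inside the function.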

Responsible Use and Compliance

While Jamba offers immense power and flexibility, it is crucial to use this technology responsibly. AI21 Labs emphasizes the importance of implementing proper safeguards and bias mitigation strategies, and of complying with data privacy laws, especially for cloud deployments. The responsible use of Jamba is essential to prevent misuse and to uphold ethical standards in AI development and deployment.

The Bottom Line

Jamba represents a significant leap forward in the evolution of large language models. By combining the strengths of Transformers with the efficiency of Mamba layers and the scalability of Mixture-of-Experts modules, Jamba pushes the boundaries of what is possible in AI.

Its state-of-the-art performance, reduced memory usage, and adaptability make it a valuable tool for developers and researchers alike. However, as with any powerful technology, responsible use and adherence to ethical guidelines are paramount to fully realizing its potential.